Using Envoy to route internal requests

When Asana users interact with the web app, their browser makes requests to Asana’s backend servers to get the data the user needs. But plenty of data also needs to move between backend services before a response can be returned to the user. This blog post will discuss how we serve those internal requests with Envoy, a distributed service proxy.

Background: Choosing Envoy

In Asana’s initial design, all data was provided by a JavaScript monolith. We started splitting this monolith into separate services as a way to achieve performance gains at scale and to improve development velocity by enabling independent deployment cycles. Code that called into these new services now had the responsibility to identify the destination of its requests. Then in late 2019, Asana deployed new infrastructure in Frankfurt, Germany to allow enterprise customers to host their data in the EU.

Designing for infrastructure hosted in a second AWS data center highlighted a number of new requirements for our architecture:

- Callers needed to be oblivious to how requests are routed: As Asana’s infrastructure grew, systems became more modularized, increasing the number of services. Each caller was expected to be aware of each service, which required a lot of code to write and maintain.

- We needed a more reliable service discovery mechanism: Callers were getting information about each service from a centralized configuration source, which wasn’t always easy to update dynamically as service endpoint addresses changed.

- Transmission between data centers needed to be secure: As Asana deployed new infrastructure in a different global region, we needed to ensure that external parties could not intercept data sent between regions.

Envoy addressed these initial needs, and because of these and other benefits we eventually adopted it as our primary internal request router. In this blog post, we’ll discuss how our Envoy-based, service-oriented architecture lets us remove routing logic from the caller, perform reliable service discovery, and automatically encrypt and decrypt traffic for cross-region requests. We’ll also cover how these benefits have simplified our architecture and allowed us to scale up our infrastructure more effectively.

Request flow: What the caller knows

While much of Asana’s web app is hosted on EC2 instances, Asana also has several backend services deployed in Kubernetes containers using our KubeApp framework. These services typically communicate with each other using gRPC, an open-source remote procedure call framework originally developed at Google. With gRPC, shared message and service definitions are used to generate code for both callers and servers. gRPC is supported in all of the languages we use at Asana, so using this protocol removes language interoperability issues and simplifies request serialization.

So what does a request look like? For each request, the caller is responsible for providing three pieces of information: the request body, the destination service method, and the region to which the request is being sent. Once these have been specified, the request is sent to an Envoy process. For callers hosted on our EC2 instances, Envoy runs as a local process. For requests that originate in a Kubernetes container, the request data is sent to an Envoy process deployed in a separate (“sidecar”) container in the same Kubernetes pod.

In either case, once the caller creates the request and provides the relevant information, Envoy abstracts away the routing implementation. Once the destination service receives the request, it completes some work and returns a response to Envoy, which in turn delivers it to the caller. This means that developers can request data between our backend services without worrying about where the data comes from.
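To make the caller’s side of this flow concrete, here is a minimal Python sketch. It is illustrative only: the `InternalRequest` type, the `x-destination-region` metadata key, and the `send_via_envoy` helper are hypothetical names, not taken from Asana’s codebase, and the transport is a stand-in for whatever gRPC channel points at the local or sidecar Envoy process.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class InternalRequest:
    """The three things a caller specifies; nothing about endpoints."""
    body: bytes          # serialized request payload
    service_method: str  # destination service method (hypothetical naming)
    region: str          # region the request should be served in

    def metadata(self) -> Dict[str, str]:
        # Hypothetical metadata key that tells the proxy which region to use.
        return {"x-destination-region": self.region}


# A transport takes (method, body, metadata) and returns the response bytes.
# In a real deployment this would be a channel to the nearby Envoy process.
Transport = Callable[[str, bytes, Dict[str, str]], bytes]


def send_via_envoy(request: InternalRequest, transport: Transport) -> bytes:
    """Hand the request to the proxy; the caller never resolves an endpoint."""
    return transport(request.service_method, request.body, request.metadata())


def echo_transport(method: str, body: bytes, metadata: Dict[str, str]) -> bytes:
    """Stand-in for the proxy so the sketch runs on its own."""
    return b"handled " + method.encode() + b" in " + metadata["x-destination-region"].encode()


request = InternalRequest(b"{}", "SchemaUpdateService/ApplyUpdate", "eu")
response = send_via_envoy(request, echo_transport)
```

The point of the shape is what is missing: the caller holds no host names, ports, or per-service routing tables, so adding or moving a service does not require changes in calling code.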

As an example, consider a “SchemaUpdateService” that sends schema updates to Asana’s databases. When a schema update is detected, clients of the SchemaUpdateService create a request asking for the databases’ schemas to be updated, ensuring data model changes are accurately reflected in each of our databases. This request is routed to the SchemaUpdateService via Envoy. Once the SchemaUpdateService receives the request, it can update the databases and return a response to the caller indicating that the changes have been applied.

Configuration: Service mesh + Envoy Management Service

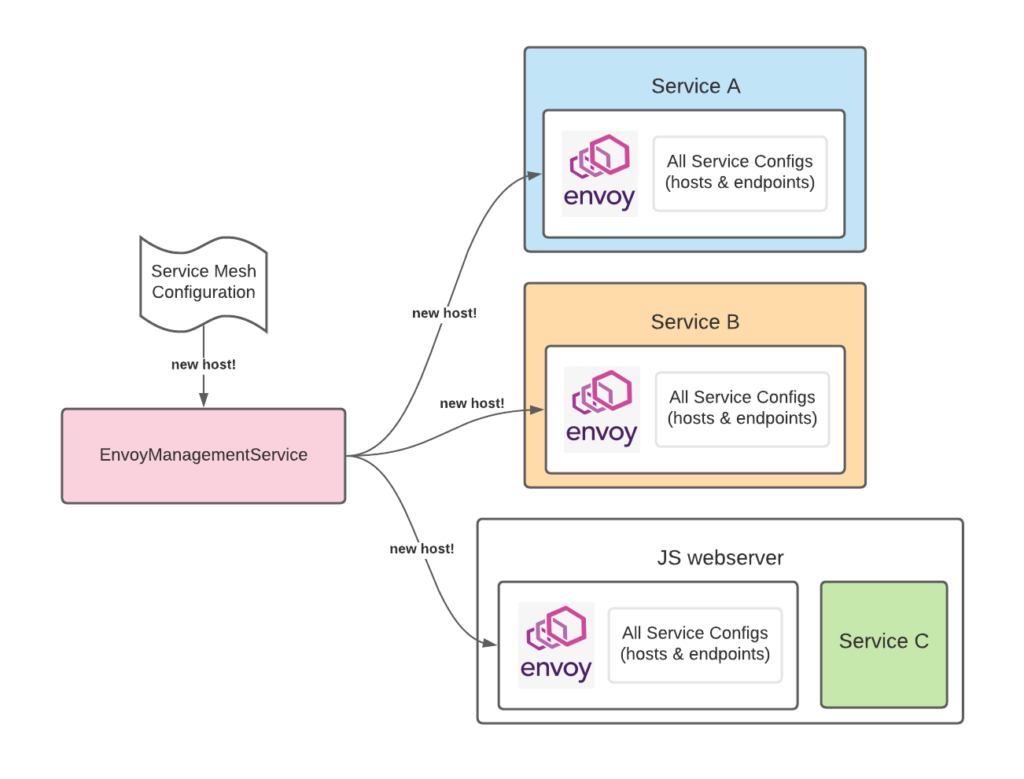

We’ve discussed how Envoy handles individual requests, abstracting away routing concerns from callers. The collection of Envoy processes used to mediate requests is called the service mesh. In this section, let’s explore how we configure and update the Envoy processes that make up the service mesh.

A request that originates within the service mesh can be sent directly to its destination, using Envoy to handle routing details. We also have a service (the “service mesh gateway”) that acts as the ingress point for requests entering the service mesh. The service mesh gateway requires TLS authentication to allow external requests to be sent to their destination service.

Envoy configurations are created and distributed by the Envoy Management Service (EMS), which we built using Envoy’s xDS protocol. When an Envoy resource changes, it sends a request with a version number to EMS. EMS updates the configuration for that resource, and pushes the updated configuration to the rest of the Envoy processes in the service mesh. In this way, EMS does dynamic service discovery and sends available service endpoints to all its subscriber Envoy processes as they are requested.

How EMS keeps production Envoy containers and processes up to date.
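The push model described above can be sketched as a toy versioned publish/subscribe loop. This is a simplified illustration and does not use the real xDS API: `ToyManagementService`, `ToyProxy`, and the endpoint address are hypothetical, and the only behavior modeled is "bump a version on change, push the new snapshot to every subscriber, ignore stale versions".

```python
from typing import Callable, Dict, List

Endpoints = Dict[str, List[str]]  # service name -> list of "host:port"


class ToyManagementService:
    """Holds the current endpoint table and pushes it to subscribers."""

    def __init__(self) -> None:
        self._version = 0
        self._endpoints: Endpoints = {}
        self._subscribers: List[Callable[[int, Endpoints], None]] = []

    def _snapshot(self) -> Endpoints:
        # Copy so that subscribers cannot mutate the shared table.
        return {name: list(addrs) for name, addrs in self._endpoints.items()}

    def subscribe(self, on_update: Callable[[int, Endpoints], None]) -> None:
        self._subscribers.append(on_update)
        on_update(self._version, self._snapshot())  # send current state

    def set_endpoints(self, service: str, addresses: List[str]) -> None:
        self._endpoints[service] = list(addresses)
        self._version += 1
        for push in self._subscribers:
            push(self._version, self._snapshot())


class ToyProxy:
    """Stands in for one Envoy process receiving configuration."""

    def __init__(self) -> None:
        self.version = -1
        self.endpoints: Endpoints = {}

    def apply(self, version: int, endpoints: Endpoints) -> None:
        if version <= self.version:
            return  # stale or duplicate update, keep the newer config
        self.version = version
        self.endpoints = endpoints


ems = ToyManagementService()
proxy_a, proxy_b = ToyProxy(), ToyProxy()
ems.subscribe(proxy_a.apply)
ems.subscribe(proxy_b.apply)
ems.set_endpoints("SchemaUpdateService", ["10.0.0.5:50051"])
```

After the last call, both proxies hold the same endpoint table at the same version, which is the property that lets callers stop consulting a centralized configuration source themselves.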

Local vs. remote regions: Security and cross-region routing

Once an Envoy process receives a request, it determines whether the destination region is local or remote. We’ve seen that for a local request, Envoy can simply send the request to an endpoint associated with the relevant destination service, as made available via its configuration from EMS. Routing is a bit more involved for cross-region requests. In this case, Envoy must send the request to a service mesh gateway in the remote region.

Here’s how it works: First, requests are created with their corresponding destination service and region. Then the requests are sent to Envoy sidecars deployed in the same pod as the requester. When Envoy gets the request and identifies the destination region as non-local, the request is encrypted, and rather than being sent to the destination service directly, it is directed to the remote gateway. Once a request arrives at the gateway, the gateway decrypts the request and obtains the destination service, and then the request is treated as a local request and routed to the appropriate destination service. In this way, Envoy completely abstracts cross-region routing concerns away from the callers.

An addition to the SchemaUpdateService example, showing how Asana updates database schemas with our service-oriented architecture.
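The local-versus-remote decision described in this section can be written down as a small Python function. This is a sketch under stated assumptions rather than Envoy’s actual routing logic (which is expressed in Envoy configuration, not application code): the region names, gateway address, and `choose_route` helper are hypothetical, and picking the first endpoint is a placeholder for real load balancing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RouteDecision:
    target: str         # address the proxy forwards the request to
    cross_region: bool  # True means: encrypt and send via the remote gateway


def choose_route(
    service: str,
    destination_region: str,
    local_region: str,
    local_endpoints: Dict[str, List[str]],
    remote_gateways: Dict[str, str],
) -> RouteDecision:
    """Decide where a request goes based only on its destination region."""
    if destination_region == local_region:
        # Local request: go straight to an endpoint of the destination service.
        # Taking the first endpoint is a placeholder for load balancing.
        return RouteDecision(local_endpoints[service][0], cross_region=False)
    # Remote request: the proxy does not need the remote service's endpoints.
    # It forwards to that region's service mesh gateway, which decrypts the
    # request and then routes it as a local request on its own side.
    return RouteDecision(remote_gateways[destination_region], cross_region=True)


local_endpoints = {"SchemaUpdateService": ["10.0.0.5:50051"]}
remote_gateways = {"eu": "mesh-gateway.eu.example.internal:443"}

local = choose_route("SchemaUpdateService", "us", "us", local_endpoints, remote_gateways)
remote = choose_route("SchemaUpdateService", "eu", "us", local_endpoints, remote_gateways)
```

Note that the remote branch never looks at per-service endpoints in the other region: each proxy only needs to know one gateway address per remote region, and the gateway handles the rest.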

Results of using Envoy to route internal requests

Using a service mesh architecture facilitated by Envoy has addressed many of the pain points that initially drew Asana toward this approach: It has reduced the amount of code developers need to write to create requests, made service discovery much less painful, and allowed us to securely send cross-region traffic between our data centers. Envoy has provided us a number of additional benefits as well: health checking, visibility into our internal networks, a consistent routing layer across heterogeneous services, a way to easily configure rate limiting for individual services, and robustness to changes in our underlying infrastructure. While working with Envoy has added a bit more complexity to our debugging and testing, we consider the benefits to be well worth that cost.

We’re really excited about expanding on our use of Envoy in our infrastructure. Right now, we’re exploring how Envoy can help us improve our rate limiting, integrate with AWS Lambda functions, and build more reliable canary deployments. If you would like to be a part of this work, we’re hiring for our Core Infrastructure area! We’d love to have you join us.